Source: CAS Key Laboratory of Speech Acoustics and Content Understanding, IACAS

On July 1st, the results of this year’s Detection and Classification of Acoustic Scenes and Events (DCASE2019) competition, organized by the Audio and Acoustic Signal Processing (AASP) Technical Committee of the Institute of Electrical and Electronics Engineers (IEEE), were published online. A team from the CAS Key Laboratory of Speech Acoustics and Content Understanding, IACAS, ranked first in the acoustic scene classification subtask (Task 1A). Prof. ZHANG Pengyuan led the team, whose members included CHEN Hangting, LIU Zuozhen and LIU Zongming.

The DCASE2019 Challenge comprised five major tasks: acoustic scene classification, audio tagging with noisy labels and minimal supervision, sound event localization and detection, sound event detection in domestic environments, and urban sound tagging. Among them, acoustic scene classification with matched recording devices had the longest history, the largest number of participants and the strongest competition.

This year, 38 teams participated in the competition, including teams from the University of Science and Technology of China, the Chinese University of Hong Kong, Beijing University of Posts and Telecommunications, the University of Surrey (UK) and Brno University of Technology (Czech Republic), as well as other top universities around the world. Teams from well-known companies such as Intel (USA), Samsung (Samsung Research China-Beijing) and LG (Korea) also participated.

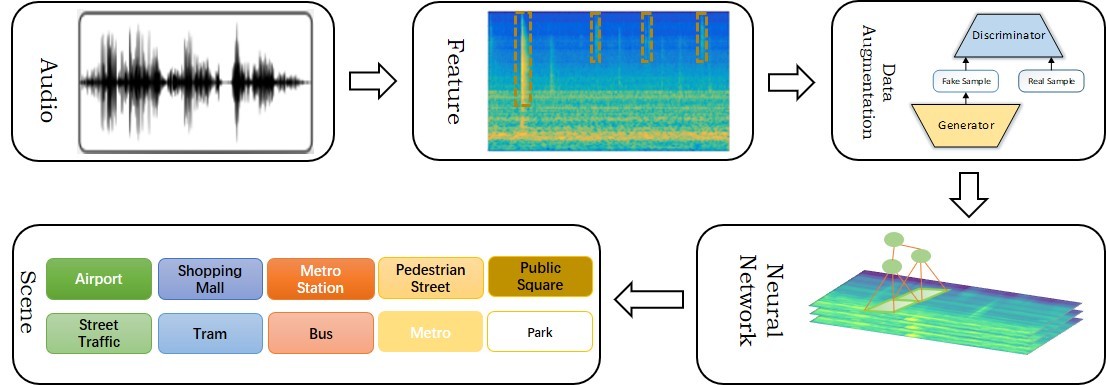

The purpose of acoustic scene classification is to identify the scene in which an audio recording was made, such as a metro, a park or an airport, so that wearable devices and intelligent robots can perceive their surroundings and respond accordingly (Figure 1).

Figure 1. The acoustic scene classification system based on deep learning and data augmentation (Image by IACAS)

In real life, acoustic scene recognition can be widely used in mobile devices and smart robots. Mobile devices can intelligently switch modes by sensing information about the external environment. For robots, auditory and visual information complement each other, and in some extreme environments audio information is comparatively easy to perceive.

In this competition, the IACAS team explored a variety of long-term and short-term features, combined with deep learning-based data augmentation methods. The submitted system achieved a test accuracy of 85.2%, ahead of the second-place system by 1.4% and well above human performance.

The DCASE competition was first organized in 2013 by the Centre for Digital Music at Queen Mary University of London and the Analysis/Synthesis team at IRCAM. It has since become the most authoritative competition in the field of acoustic context understanding.

Notes:

The median accuracy of human listeners in classifying the scenes is about 75%; see Barchiesi D, Giannoulis D, Stowell D, et al. Acoustic Scene Classification: Classifying environments from the sounds they produce[J]. IEEE Signal Processing Magazine, 2015, 32(3): 16-34.