For voice communication, it is important to suppress background noise without introducing unnatural distortion. Deep-learning-based speech enhancement approaches can suppress background noise effectively. However, under noise-mismatched conditions, unnatural residual noise arises and seriously degrades the listening comfort of the speech.

Recently, researchers from the Institute of Acoustics of the Chinese Academy of Sciences (IACAS) proposed a supervised speech enhancement approach with residual noise control for voice communication. By deliberately retaining low-level residual noise, the researchers aimed to jointly maximize noise reduction and minimize speech distortion, leading to better perceptual comfort in the enhanced speech.

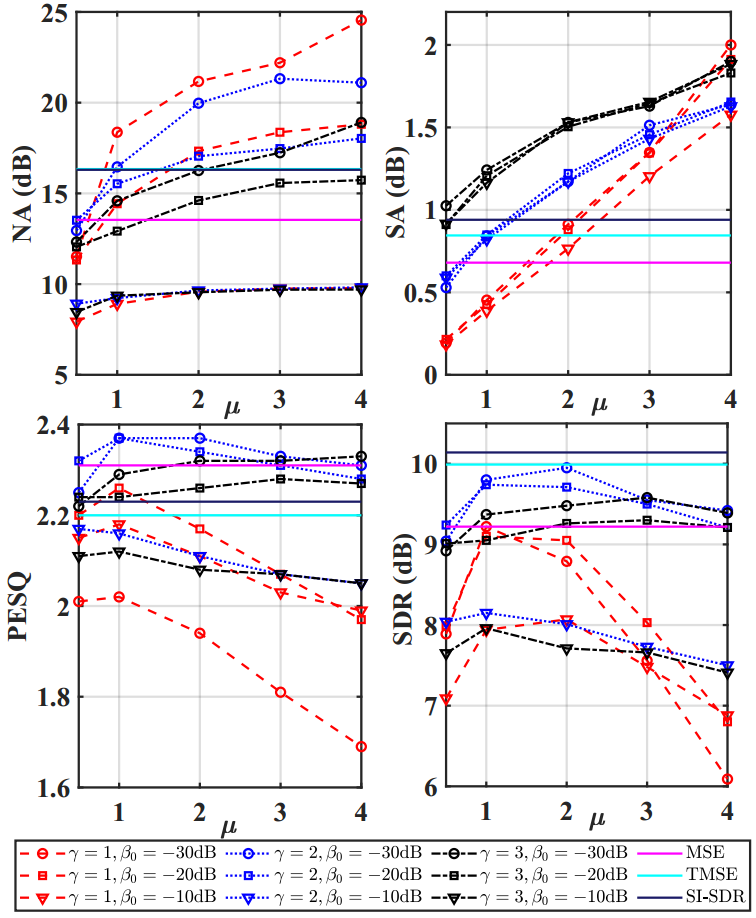

To address the common shortcomings of existing loss functions, the researchers introduced multiple adjustable hyper-parameters and derived a generalized loss function. With suitable parameter configurations, the enhanced speech can be balanced flexibly and effectively between the two objectives. Meanwhile, by retaining low-level background noise, they notably improved the subjective perceptual quality.
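To make the idea of a tunable trade-off more concrete, here is a minimal sketch of a loss that weighs speech distortion against residual noise while aiming for a low-level noise floor instead of complete noise removal. It is an illustration under stated assumptions, not the formula derived in the paper: the function name `tradeoff_loss` and the parameters `alpha` (weight between the two objectives), `floor` (target residual noise level) and `p` (norm exponent) are all hypothetical.

```python
import numpy as np


def tradeoff_loss(gain, speech_mag, noise_mag, alpha=0.5, floor=0.1, p=2.0):
    """Illustrative trade-off loss (assumed form, not the paper's definition).

    gain       : spectral gain estimated by a model, values in [0, 1]
    speech_mag : magnitude spectrum of the clean speech
    noise_mag  : magnitude spectrum of the noise
    alpha      : weight on speech distortion versus residual noise error
    floor      : fraction of the noise that is intentionally kept
    p          : exponent applied to both error terms
    """
    gain = np.asarray(gain, dtype=float)
    speech_mag = np.asarray(speech_mag, dtype=float)
    noise_mag = np.asarray(noise_mag, dtype=float)

    # Speech distortion: the part of the clean speech removed by the gain.
    distortion = np.abs((1.0 - gain) * speech_mag) ** p

    # Residual noise error: how far the filtered noise is from the
    # low-level target noise (floor * noise), rather than from zero.
    residual = np.abs((gain - floor) * noise_mag) ** p

    return float(np.mean(alpha * distortion + (1.0 - alpha) * residual))
```

In this sketch, raising `alpha` favors low speech distortion, lowering it favors noise reduction, and a nonzero `floor` asks the model to keep a small, natural-sounding amount of background noise. Setting `alpha=0.5`, `floor=0` and `p=2` reduces it to an equally weighted sum of speech-distortion and residual-noise energies, which hints at how familiar losses can appear as special cases of a more general form.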

Experimental results showed that, with suitable parameter configurations, the enhanced speech could outperform previous approaches in both objective metrics and subjective evaluations.

Many commonly used loss functions can be regarded as special cases of the proposed generalized loss function, so they can be optimized with the same approach. This work could be applied to noise suppression and speech information extraction in speech communication devices.

The study, published in Applied Sciences, was supported by the National Natural Science Foundation of China.

Objective metric comparisons of different parameter configurations. (Image by IACAS)

Reference:

LI Andong, PENG Renhua, ZHENG Chengshi, LI Xiaodong. A Supervised Speech Enhancement Approach with Residual Noise Control for Voice Communication. Applied Sciences, 2020, 10, 2894. DOI: 10.3390/app10082894

Contact:

ZHOU Wenjia

Institute of Acoustics, Chinese Academy of Sciences, 100190 Beijing, China

E-mail: media@mail.ioa.ac.cn