Previous studies using auditory sequences with rapidly repeated tones have shown that spatiotemporal and spectral cues are important for fusing or segregating sound streams. However, in those long sequences, perceptual grouping is partly driven by the cognitive processing of periodicity cues.

This research investigates whether the same perceptual grouping principles (spatiotemporal grouping vs. frequency grouping) also apply to short auditory sequences, whose perceptual organization is mainly subserved by lower levels of perceptual processing. Two experiments using an auditory Ternus display are conducted.

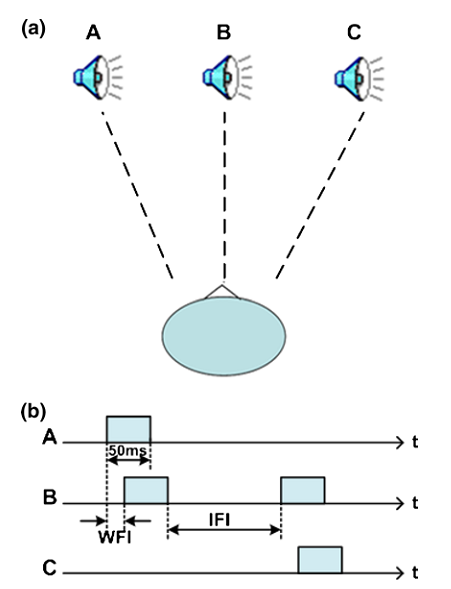

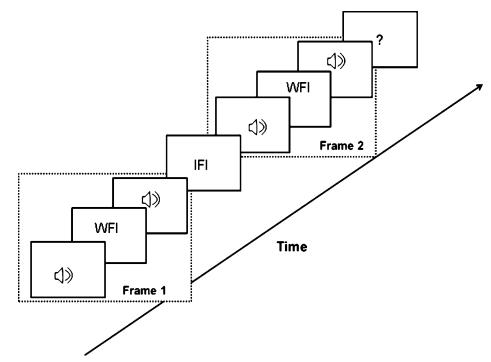

The display is composed of three speakers (A, B and C) that emit sounds in succession, forming two auditory frames (AB and BC). Experiment 1 manipulates both spatial and temporal factors, using three within-frame intervals (WFIs: the intervals between A and B and between B and C), seven inter-frame intervals (IFIs: the interval between frames AB and BC) and two speaker layouts (near or far spacing between speakers). In addition to the spatiotemporal cues used in Experiment 1, Experiment 2 manipulates the frequency difference between the two auditory frames. Listeners make a two-alternative forced choice (2AFC) to report their perception of each Ternus display: element motion (auditory apparent motion from sound A to B to C) or group motion (auditory apparent motion from sound ‘AB’ to ‘BC’).
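As a rough illustration of the Experiment 1 design, the Python sketch below crosses the three WFIs, seven IFIs and two motion directions into a shuffled trial list for one speaker layout. The WFI values and the 45 cm / 25 cm spacings come from the Fig. 1 caption; the evenly spaced IFI steps (30 ms from 50 to 230 ms), the `build_trials` helper and its `repetitions` parameter are hypothetical assumptions, not the authors' procedure.

```python
import itertools
import random

# WFIs for Experiment 1, as listed in the Fig. 1 caption.
WFIS_MS = [5, 10, 20]
# Assumption: seven IFIs evenly spaced across the reported 50-230 ms range.
IFIS_MS = list(range(50, 231, 30))
# Center-to-center speaker spacing: far (Experiment 1a) and near (Experiment 1b).
LAYOUTS_CM = {"far": 45, "near": 25}
# Motion direction is randomized and counterbalanced.
DIRECTIONS = ["left", "right"]


def build_trials(layout, repetitions=2, seed=None):
    """Return a shuffled trial list for one speaker layout.

    `repetitions` is hypothetical: the number of repeats per
    WFI x IFI x direction cell is not given in the summary.
    """
    trials = [
        {
            "layout": layout,
            "spacing_cm": LAYOUTS_CM[layout],
            "wfi_ms": wfi,
            "ifi_ms": ifi,
            "direction": direction,
        }
        # Full crossing keeps left/right balanced within every WFI x IFI cell.
        for wfi, ifi, direction in itertools.product(WFIS_MS, IFIS_MS, DIRECTIONS)
        for _ in range(repetitions)
    ]
    random.Random(seed).shuffle(trials)  # randomize presentation order
    return trials
```

For example, `build_trials("near", seed=1)` yields 84 trials (3 WFIs × 7 IFIs × 2 directions × 2 repetitions). Fully crossing direction with the timing factors is one simple way to make the randomized directions also counterbalanced.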

The experimental results show that the perceptual grouping of short auditory sequences, as reflected in listeners' judgments of the auditory Ternus display, is modulated by temporal and spectral cues, with spectral cues contributing more to segregating auditory events. Spatial layout plays a smaller role in perceptual organization. These results can be accounted for by the ‘peripheral channeling’ theory.

This research is supported by grants from the Natural Science Foundation of China (11174316, 31200760), the National High Technology Research and Development Program of China (863 Program) (2012AA011602) and the Strategic Priority Research Program of the Chinese Academy of Sciences (XDA06020201).

The research entitled “The Role of Spatiotemporal and Spectral Cues in Segregating Short Sound Events: Evidence from Auditory Ternus Display” has been published in Experimental Brain Research (January 2014, Vol. 232, No. 1, pp. 273-282).

Fig. 1 Experimental setup and temporal correspondence of motion streams used in Experiment 1 and Experiment 2. a Three speakers are placed horizontally with a center-to-center distance of 45 cm in Experiment 1a and 25 cm in Experiment 1b. Participants sit in front of the middle speaker at a viewing distance of 70 cm and judge whether they perceive the auditory Ternus display as element motion (‘EM’) or group motion (‘GM’). b Temporal correspondence of the three sounds: sounds A, B and C compose two auditory frames (‘AB’ and ‘BC’) with a within-frame interval (WFI, the interval between A and B, and between B and C) of 5, 10 or 20 ms in Experiment 1 and 5 or 20 ms in Experiment 2. The inter-frame interval (IFI, the interval between the two frames ‘AB’ and ‘BC’) is selected from the range 50–230 ms in Experiment 1 and 30–210 ms in Experiment 2. The frequencies used in Experiment 2 are 800 Hz for the standard frame and 820, 860 or 1,000 Hz for the comparative frame. The direction of auditory motion (left or right) is randomized and counterbalanced (Image by WANG).
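The timing in panel b can be made concrete with a small Python sketch that computes the onsets of the four sounds in one display (speaker B sounds once in each frame) and expresses the Experiment 2 frequency differences in semitones. For illustration only, it assumes that WFI and IFI are silent gaps measured from one sound's offset to the next sound's onset, that each sound lasts a hypothetical `sound_ms`, and that `frame_hz` lists the first and second frame frequencies in order; the caption does not specify these details, and `ternus_timeline` and `semitones` are hypothetical helper names.

```python
import math
from dataclasses import dataclass

STANDARD_HZ = 800.0                      # standard frame (Fig. 1 caption)
COMPARATIVE_HZ = [820.0, 860.0, 1000.0]  # comparative frame (Fig. 1 caption)


@dataclass
class SoundEvent:
    speaker: str
    onset_ms: float
    frequency_hz: float


def ternus_timeline(wfi_ms, ifi_ms, sound_ms=30.0, direction="right",
                    frame_hz=(STANDARD_HZ, STANDARD_HZ)):
    """Return the four sound events of one auditory Ternus display.

    Assumptions (not from the study): WFI and IFI are offset-to-onset gaps,
    every sound lasts `sound_ms`, and `frame_hz` gives the frequency of the
    first and second frame in that order.
    """
    speakers = ["A", "B", "C"] if direction == "right" else ["C", "B", "A"]
    frames = [speakers[0:2], speakers[1:3]]  # 'AB' then 'BC' for rightward motion
    events = []
    frame_onset = 0.0
    for (first, second), hz in zip(frames, frame_hz):
        events.append(SoundEvent(first, frame_onset, hz))
        second_onset = frame_onset + sound_ms + wfi_ms  # WFI gap inside the frame
        events.append(SoundEvent(second, second_onset, hz))
        frame_onset = second_onset + sound_ms + ifi_ms  # IFI gap before the next frame
    return events


def semitones(f_hz, ref_hz=STANDARD_HZ):
    """Frequency difference relative to `ref_hz`, in semitones."""
    return 12.0 * math.log2(f_hz / ref_hz)
```

Under these assumptions, `ternus_timeline(5, 50, frame_hz=(800.0, 1000.0))` starts the second frame 50 ms after the first frame ends, and the comparative frequencies of 820, 860 and 1,000 Hz lie roughly 0.43, 1.25 and 3.86 semitones above the 800 Hz standard.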

Fig. 2 Schematic illustration of the events presented on one trial in both Experiment 1 and Experiment 2. The auditory stimulus consists of two sound frames (white noise or tones); the sounds within a frame are separated by the within-frame interval (WFI), and the two frames are separated by the inter-frame interval (IFI). After the auditory stimulus, a question mark prompts participants to choose between the two options with a left or right key press (Image by WANG).
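The 2AFC responses collected on each trial can be summarized as the proportion of group-motion reports per WFI × IFI condition, one common way to quantify Ternus judgments. The Python sketch below does this; mapping the left key to ‘EM’ and the right key to ‘GM’, the `(wfi_ms, ifi_ms, key)` record format and the `proportion_group_motion` name are hypothetical, since the caption only states that participants press a left or right key.

```python
from collections import defaultdict

# Hypothetical key mapping: the study only reports a left or right key press.
KEY_TO_PERCEPT = {"left": "EM", "right": "GM"}


def proportion_group_motion(responses):
    """Proportion of 'GM' (group motion) reports per (WFI, IFI) condition.

    `responses` is an iterable of (wfi_ms, ifi_ms, key) tuples, one per trial.
    """
    counts = defaultdict(lambda: [0, 0])  # (wfi, ifi) -> [GM reports, trials]
    for wfi_ms, ifi_ms, key in responses:
        cell = counts[(wfi_ms, ifi_ms)]
        if KEY_TO_PERCEPT[key] == "GM":
            cell[0] += 1
        cell[1] += 1
    # Conditions are returned in sorted (WFI, IFI) order.
    return {cond: gm / total for cond, (gm, total) in sorted(counts.items())}
```

Plotting these proportions against IFI, separately for each WFI, speaker layout or frequency difference, is one way to see how each cue shifts listeners between element and group motion.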

Contact:

BAO Ming

Key Laboratory of Noise and Vibration Research, Institute of Acoustics, Chinese Academy of Sciences, Beijing 100190, China

E-mail: baoming@mail.ioa.ac.cn